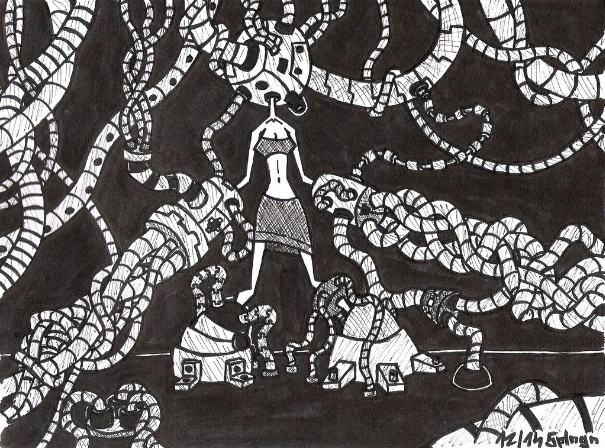

The passive user is not only unable to have a say in how digital technology mediates their everyday life, but can’t even see how it does so. IMAGE CREDIT: SPLENGUIN / DEVIANTART

Recently, I had the privilege of giving a brief presentation at the Southern California Code4Lib Quarterly Meet Up on Commons in a Box (CBOX), an open source platform for setting up digital commons at educational and scholarly organizations. While I’ve long been a passionate advocate for CBOX and its role in higher education (I still fondly refer to it as my “fourth committee member”), I am only beginning to get to know the rich world of “technology folks” within the broader library community. So far, however, I’ve been deeply impressed by their commitment to community values and their ability to organize highly complex, multi-institutional technical collaborations such as the Fedora Repository. Thus, as someone who is looking for ways to further grow open source software initiatives for humanities research and education, I look to this community as a model and an ally. The following is a summary of my presentation on why I think it’s necessary that universities begin to implement community-driven software projects such as CBOX.

The past few times I’ve talked about CBOX, I’ve emphasized the platform as a means for universities and colleges to cultivate a participatory campus culture, where the traditionally private processes of learning and research are opened up to generate academic community, public resources, and greater public visibility of humanistic engagement. Through CBOX’s range of communicative tools, such as blogs, forums, file sharing, messaging, and activity feeds, users are able to creatively expand the aims of their research, teaching, and learning.

Today, however, I’d like to talk about a different asset of CBOX that goes beyond any one of its particular features. That is, I’d like to talk about how the platform itself, as an open source tool developed by academics for academics, represents a mighty step forward in participatory infrastructure for higher education.

I use “participatory infrastructure” here to signify digital infrastructure whose mechanisms are transparent to its user community, whose user community is able to critically assess the ways in which it affects and mediates their community, and which is designed to invite continued development by that user community. Participatory infrastructure requires open technical protocols and social organization that encourages all users, regardless of expertise, to participate in discussions regarding that infrastructure’s ongoing development. Or, in short, as I joked with the Code4Lib audience, participatory infrastructure is like “inviting the masses into the control room and handing them a wrench.”

Of course, I was being somewhat facetious with that last remark. Software development is complicated enough in a quiet, well-ordered room full of experts; it is hard to imagine how a digital platform of any value or reliability might be run by the so-called “masses.” It is equally difficult to imagine what sort of user would possibly appreciate the gesture. In the long and hard struggle of making software as painless and accessible as possible for the general user, we have as a culture taken invisibility to be one of the chief ideals of digital infrastructure.

Nonetheless, the spirit of my joke was also in earnest. Invisible infrastructure may be convenient infrastructure, but it is also highly problematic. Its seamless entrance into the lives of today’s 3.4 billion Internet users has made it very difficult for us as a public to grasp, discuss, and make decisions regarding its political effects. For example, users are typically unaware of how internal mechanisms encourage and discourage certain types of user activities or enable and disable certain types of user communities. Software under this model also typically conceals what sort of user data it collects, what insights are generated with that data, and whose interests those insights serve. The effect has been pedagogical in that it has taught us to not care, or not care enough, about the following questions:

What do digital companies do with the 1.8 million megabytes of data produced by the average American office worker every single year? How should they get to profit from it? To what degree should they have to disclose their practices to the users or the general public? How would we know if they don’t? Who is entitled to this data and from whom must it be protected? Health insurance companies? Car insurance companies? One’s employer? One’s government? This year? Next year? Should the user herself have rights to it? Is there any difference between data collected for the purpose of consumer surveillance and data collected according to the will and interest of the public? Do we even adequately understand what sorts of insights data scientists can make about a user’s past, personality, lifestyle, and future decisions? Do we know how those insights are being used and are we confident that they will not be used at the expense of the public good?

How do algorithms shape the way news and knowledge are circulated, how opinions are formed, how votes are cast? How does digital infrastructure determine who has access to knowledge and who doesn’t, whose voices are heard and whose are obscured, whether conflicting views are hidden from one another, used to inflame one another, or brought into productive dialogue? How do digital platforms shape our assumptions about what knowledge even is, how it is made, and what it can do?

Questions along these lines are brought up time and again but have yet to result in a widespread practical response. For one, it is nearly impossible for communities to research how platforms mediate user behavior and the flow of information, as the algorithms and practices of the most popular platforms are private and thus unavailable for analysis. For another, it is very difficult to escape these platforms’ grip. As Bruce Schneier writes, “These are the tools of modern life. They’re necessary to a career and a social life. Opting out just isn’t a viable choice for most of us, most of the time; it violates what have become very real norms of contemporary life.” Thus, able neither to escape nor study the digital infrastructure which mediates so much of our lives, our freedom diminishes while our ignorance grows. The effect is self-reproducing. As we continue to conceal the nitty-gritty details of digital infrastructure from the everyday user, we continue to deny her the opportunity of understanding the intertwined political and technical issues at play in its use. And thus it remains nearly impossible for us as a public to imagine how digital infrastructure might be any other way, and how it might serve us better as a democratic public.

This situation has in effect produced what I call the passive user, or the user who is not only unable to have a say in how digital technology mediates their everyday life, but can’t even see how it does so. And while digital infrastructure within the university provides very valuable services and resources that we must protect and sustain, we must also consider how these services on their own might contribute to the mass production of this passive user. If we take the political stakes of digital infrastructure seriously, we must look for ways to develop less passive, more participatory relationships with software within higher education.

I want to be clear: I am not arguing for universities and users to “opt out” of the very valuable digital services that for a multitude of political, practical, and proprietary reasons conceal their internal mechanics from users’ view. But I am arguing for the need to identify sites within the university in which participatory infrastructure might be meaningfully practiced, even if in small ways. These ventures will necessarily be experimental and exploratory, at least in the beginning; they will also require a significant amount of social engineering. Facilitators will have to consider how they will generate student interest, how they will organize, legitimate, and reward labor, what sort of participatory capacities will be made available, and how to financially sustain the endeavor. And they will also obviously need to choose an infrastructural site, such as a digital tool or platform, which they might reasonably open up to participation.

While there are conceivably myriad ways of doing this, I’d like to point to CBOX as an exemplary model. Not only has it involved numerous scholars, educators, and students in discussions pertaining to its maintenance and development at The CUNY Graduate Center, but its software is used to great success by organizations and institutions across the country. Though it does not explicitly enact “participatory infrastructure,” the fact that it is developed by a team composed largely of academics deeply embedded in an academic setting has in many ways shrunk the divide between the makers and users of that software. Being part of that community while at The CUNY Graduate Center was one of the most educative experiences of my schooling to date. As it becomes increasingly clear that digital technology mediates virtually every aspect of social and political life, it is urgent that we acculturate, not just educate, our entire civic body in infrastructural citizenship. While facilitating participatory infrastructure will certainly pose challenges, it’s high time that experts aren’t the only ones in the control room.